A few months before Yoshua Bengio’s participation in the SNF Nostos Conference on August 26 – 27, 2021, on “Humanity and Artificial Intelligence,” we discussed with him the future of “intelligent” computer systems, the effort to teach AI some common sense, and the possibilities and challenges of using such technologies.

Author’s Note: We used Artificial Intelligence systems for both transcribing and correcting the English text before publishing this interview.

Yoshua Bengio is recognized as one of the world’s leading experts in artificial intelligence and a pioneer in deep learning. In 2019, he received the Killam Prize as well as the ACM A.M. Turing Award, “the Nobel Prize of Computing,” jointly with Geoffrey Hinton and Yann LeCun, for conceptual and engineering breakthroughs that have made deep neural networks a critical component of computing.

Lab: Can you tell us in simple words what artificial intelligence is for those of us who are not familiar with computer science?

Yoshua Bengio: Humans possess mental abilities that we would like computers to have, and that’s basically what artificial intelligence research is trying to achieve. Many directions have been explored for achieving this, but one thing I want to mention is that, because we’re trying to build these kinds of mental abilities into machines, we often take inspiration from human or animal intelligence.

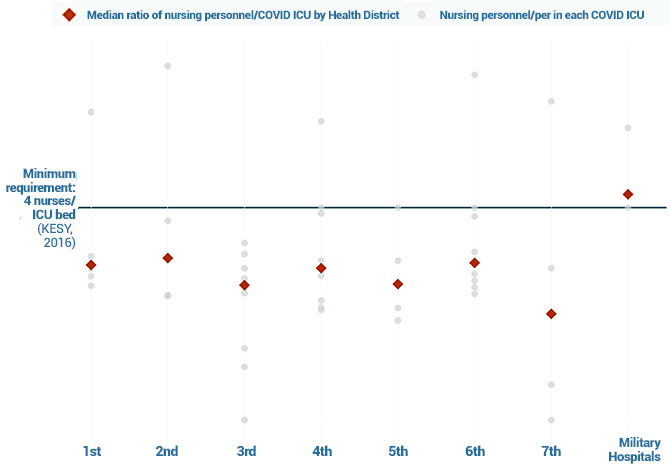

Understaffed COVID ICUs and the association between fatalities and Greek hospital occupancy

An X-ray of the Greek Health System during the pandemic shows that many of the COVID-19 ICUs across the country are understaffed and demonstrates a correlation between deaths and hospital occupancy during the “second wave”. The lesson of Northern Greece for Attica.

You think a lot about how AI might help Humanity. What are some of the most important problems AI can help us address?

AI is not a finished product or a system that exists somewhere. Many different approaches, algorithms, and methods are still, in some ways, in their infancy. But AI is already used in many sectors of the economy and will continue to be in the future. Using a smartphone, a search engine, or social media, or making purchases online: all of these activities are driven by AI under the hood. But what I find really exciting is that a lot of current work is going into new kinds of applications driven by social needs, for example in healthcare, in fighting climate change, in education, and potentially in improving agriculture. What’s exciting is that many of the AI tools being developed are generic and can be applied across many areas. What I find very motivating is the work done in academia and by governments to look for AI applications that industry is not currently pursuing, maybe because they’re not profitable, but that are important for society.

Artificial Intelligence will help us discover new drugs

You mentioned healthcare earlier. Can you give us some examples of how AI might help? For instance, in developing new drugs?

Yes, actually, this is something I’ve been working on over the last year, motivated by the pandemic. The problem with drug discovery right now is that it’s very expensive and very slow: it takes something like ten years to discover a new drug. When we have a pandemic, or contagious diseases in developing countries that we can’t control and millions of people are dying, or when you consider issues like antibiotic resistance, which is a great threat to Humanity, then having the technology to speed up the discovery process could be immensely useful for our society. One area I’m focusing on is the search for candidate drugs, which can be automated. On the one hand, we can collect more data with new techniques, and on the other hand, we can analyze that data to propose new candidate drugs. This research can be done in collaboration with chemists and biologists who have their own ideas of where to explore, and that’s where AI can use the collected data to come up with new directions for potential drugs.

What’s holding us back from using AI to its fullest extent to help Humanity?

One issue is that the expertise and capital needed to develop useful AI applications are concentrated in a few places in the world. So we need to do a much better job of democratizing that knowledge and making some of the datasets being produced more easily accessible. This is a big issue in healthcare, either because the data is generated by companies, which prefer to keep it secret because it’s a competitive edge, or because of privacy concerns. Also, we need broader education across the world, particularly in AI, so that young people in developing countries can also participate in developing their economies using AI. Finally, there is a barrier that has to do with the inertia of our societies. Governments don’t change regulations easily. Companies don’t change the way they do things easily. Our economic system favors some types of applications, but not necessarily all of those with great social importance, like drug discovery. Antibiotics are not being explored much by industry, and there’s a huge need for them.

Exaggerated expectations of the ‘intelligent’ computer systems

What is the most overhyped aspect of AI today? And what is the most underappreciated thing?

Unfortunately, a lot of science fiction depicts AI in an unrepresentative way. AI is not magic. Current AI systems are very far from human-level intelligence, so there is a tendency to put too much trust in, or expect too much from, today’s AI systems. In the future, if we don’t self-destruct because of the environment, there is no reason to believe that we won’t be able to build machines at least as intelligent as humans. But right now we’re very far from that, and we can’t put a date on it.

Another kind of misconception is more about science in general. In science fiction, you see lonely scientists making huge discoveries alone somewhere in an isolated place. That is not how science works. Science advances in small steps, and it advances through collective effort. We build on each other’s work. We work in groups. This is very different from the picture you see in science fiction.

Are we far from becoming a “Star Trek society”? A society with the advancements we see in the Star Trek movies and series? In a past interview, you described Star Trek as a democratized and technologically advanced society.

Right. Many people may not have noticed, but in Star Trek, the economic system is very different. Everybody has enough, in a material sense, in their life. And what becomes important (for them) is to do something (through their work) to contribute to society.

Star Trek, especially The Next Generation, introduces some futuristic views about AI in terms of technology. I think it’s a bit of a caricature, but it’s not as bad as what I’ve seen in other science fiction novels or movies.

Artificial intelligence has experienced periods during which public interest, investment, and demand collapsed, and other periods with substantial innovation. I’m referring to the so-called AI winters and summers. We are currently in a blazing summer. Could another winter be ahead, though?

It’s always possible, but I believe that we have passed a critical milestone. Until the last few years, AI was mainly happening in academia, and it wasn’t very profitable in industry. Of course, in the 90s, I remember there were startups claiming to do things with AI, expert systems, and so on. Still, it never really took off, because the technology hadn’t reached the threshold level of performance that would allow significant profitability. But we have passed that threshold now.

For the foreseeable future, there will always be companies investing in AI and AI products and, of course, students wanting to study it because they’ll get a job. In fact, there’s a vast gap between the demand from industry and the number of graduating students who have the required expertise. So there may be ups and downs in the industry, but AI is here to stay.

We need to develop AI systems with “common sense”

We live in a time of huge models like GPT-3, which uses deep learning to produce human-like text. These models sometimes produce stunning results, but they seem nothing like how human beings learn. Are they pushing us in the right or the wrong direction?

It depends on what your goal is. If you have the short-term goal of improving existing products that use natural language, for example in understanding or comparing texts, as is needed in search engines, these efforts are good. The more data we put in and the bigger the models are, the better the performance. However, I don’t think that scaling up is sufficient. Even all of the text written by Humanity since the beginning of time wouldn’t be enough to build a machine that understands natural language. So we need to go back to the drawing board and build systems exposed not only to text but also to video, or even to interaction with the world, maybe through robots or virtual environments, in order to build a model that understands the world. Model-based reinforcement learning is trying to achieve this kind of thing: it aims to develop models that understand their environment.

You mean systems with basic common sense, if I understand correctly.

Exactly.

Our society is not ready for a human-level artificial intelligent system

How far are we from developing these systems and how far are we from Artificial General Intelligence?

I don’t have a crystal ball. Science moves in small steps, and sometimes you cross a threshold that could be a breakthrough, but it’s hard to predict when this will happen.

I don’t like the term Artificial General Intelligence. Let me explain why. As it was initially defined, and as the name suggests, the term refers to a completely general form of intelligence. But we have mathematical reasons and a lot of empirical evidence to suggest that there is no such thing as completely general intelligence. Human intelligence is not completely general. We’re good at some things, and we are bad at others. So our intelligence is fairly general, but it’s not completely general. That’s why I prefer the term Human-Level Intelligence. Now, going back to your question, when do we reach that? I don’t think there will be a single year when it happens. Instead, we’re going to see progress on different aspects of intelligence, and that progress won’t be equal across all human abilities.

You mean systems that can adapt to change since this is one factor that distinguishes humans from machines, right?

Machines can also adapt to change, but we do a lot better. We can adapt very quickly to change. And that is one of the exciting research areas currently in machine learning.

Is our society ready to welcome such systems, Human-Level Intelligence systems, as you described them before?

I don’t think so. I believe that we don’t have the individual and collective wisdom to make sure that very powerful technologies like AI or biotechnology are used in accordance with our values, in a way that benefits Humanity in general and doesn’t create catastrophic outcomes. Powerful technologies can be abused and exploited by people who have power. That could lead to a concentration of power and a concentration of wealth, which is not good for democracy. It can also lead to outcomes that are bad for the environment and for human rights. We need to take our time to set up the proper regulations, nationally and internationally. We need to invest more in the good side of AI, for example, AI for socially beneficial applications. But we also need to avoid a backlash. If AI is used in ways that most people start to perceive as bad for them, for example, for surveillance, for control, or for manipulating people through advertising, they will reject technology and science, and we are already seeing that kind of thing happen. So it’s vital for our societies’ progress that we are careful in the way we manage the deployment of technology.

SNF Nostos Conference on “Humanity and Artificial Intelligence” will take place on August 26 – 27, 2021.